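Istio VirtualService (vs): Advanced Routing

Source: original

Date: 2022-02-25

Author: 脚本小站

Category: Cloud native

Prerequisite for the examples below:

In these examples, the proxy service calls demoapp internally.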

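Rewrite and redirect routing example:

    # redirect: the proxy answers with an HTTP redirect and the client re-sends the request to the new location
    # rewrite: performed on the proxy side before forwarding; the client does not see it
    # The routes below mean: for requests to the demoapp service,
    # paths prefixed with /canary are rewritten and sent to demoapp (subset v11),
    # paths prefixed with /backend are redirected to the backend service,
    # and all other traffic goes to the default route.
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: demoapp
    spec:
      hosts:
      - demoapp
      http:
      - name: rewrite
        match:
        - uri:
            prefix: /canary    # when this path prefix is requested
        rewrite:
          uri: /               # rewrite the matched prefix to "/"
        route:
        - destination:
            host: demoapp      # then forward to this service
            subset: v11
      - name: redirect
        match:
        - uri:
            prefix: /backend   # when this path prefix is requested
        redirect:
          uri: /               # redirect to the "/" path
          authority: backend   # host the client is redirected to; unlike destination, the client itself sends the new request
          port: 8082
      - name: default          # default route: all other request paths go here
        route:
        - destination:
            host: demoapp
            subset: v10        # subset v10; subsets are defined in the DestinationRule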
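Since a redirect is answered to the client rather than forwarded, the status code of that answer matters to clients and caches. The following is a minimal sketch of the `redirect` rule above with an explicit status code, assuming the `redirectCode` field of `HTTPRedirect` (which defaults to 301 when omitted). The value 302 is an illustrative choice, not part of the original example.

```yaml
# Sketch: variant of the "redirect" rule with an explicit status code.
# redirectCode: 302 is an assumption for illustration only.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: redirect
    match:
    - uri:
        prefix: /backend
    redirect:
      uri: /
      authority: backend
      port: 8082
      redirectCode: 302    # temporary redirect instead of the default 301
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
```

With this applied, `curl -I demoapp:8080/backend` from a client inside the mesh should show the redirect status and a `location` header pointing at the backend host instead of a proxied response.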

Weight-based canary release:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: demoapp
    spec:
      hosts:
      - demoapp
      http:
      - name: weight-based-routing
        route:
        - destination:
            host: demoapp
            subset: v10
          weight: 90    # 90% of the traffic goes to v10
        - destination:
            host: demoapp
            subset: v11
          weight: 10    # 10% of the traffic goes to v11
    ---
    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: demoapp
      namespace: default
    spec:
      host: demoapp
      subsets:
      - labels:
          version: v1.0
        name: v10
      - labels:
          version: v1.1
        name: v11
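A canary release usually proceeds by re-applying the same VirtualService with the weights shifted step by step. As a rough sketch of a later stage, assuming v11 has behaved well at 10%, the route could look like this. The 50/50 split is an illustrative value, and the DestinationRule stays unchanged.

```yaml
# Sketch: a later rollout step for the same VirtualService.
# The 50/50 split is an assumed intermediate stage, not from the original.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: weight-based-routing
    route:
    - destination:
        host: demoapp
        subset: v10
      weight: 50    # half of the traffic stays on v10
    - destination:
        host: demoapp
        subset: v11
      weight: 50    # half of the traffic moves to v11
```

Setting the weights to 0 and 100 in a final step would complete the rollout.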

Routing by request header:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: demoapp
    spec:
      hosts:
      - demoapp
      http:
      - name: canary
        match:
        - headers:
            x-canary:
              exact: "true"
        route:
        - destination:
            host: demoapp
            subset: v11              # matching traffic is routed to v11
          headers:                   # headers set on a route destination apply only to that destination
            request:
              set:
                User-Agent: Chrome   # set (overwrite) this request header
            response:
              add:
                x-canary: "true"     # add this response header
      - name: default
        headers:                     # headers set at the rule level apply to every destination of the rule
          response:
            add:
              x-Envoy: test
        route:
        - destination:
            host: demoapp
            subset: v10

Request with the header:

    # curl -I -H "x-canary: true" demoapp:8080
    HTTP/1.1 200 OK
    content-type: text/html; charset=utf-8
    content-length: 113
    server: envoy
    date: Mon, 28 Feb 2022 07:51:38 GMT
    x-envoy-upstream-service-time: 8
    x-canary: true    # response header added by the canary route

    # curl -H "x-canary: true" demoapp:8080/user-agent
    User-Agent: Chrome

Request without the header:

    # curl -I demoapp:8080
    HTTP/1.1 200 OK
    content-type: text/html; charset=utf-8
    content-length: 114
    server: envoy
    date: Mon, 28 Feb 2022 07:53:43 GMT
    x-envoy-upstream-service-time: 26
    x-envoy: test
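The header match above uses `exact`. Istio's string match also accepts `prefix` and `regex`, so the same canary rule can key off a pattern instead of a fixed value. The following is a minimal sketch under the assumption that canary traffic should be selected by the client's `user-agent`. The pattern itself is hypothetical.

```yaml
# Sketch: select canary traffic by a header pattern instead of an exact value.
# The user-agent pattern is an illustrative assumption.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - headers:
        user-agent:                # header names are written in lowercase
          regex: ".*Chrome.*"      # RE2-style regex; written to cover the whole header value
    route:
    - destination:
        host: demoapp
        subset: v11
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
```

A request such as `curl -A "Chrome" demoapp:8080` would then be expected to reach v11, while other clients fall through to the default route.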

Fault injection:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: demoapp
    spec:
      hosts:
      - demoapp
      http:
      - name: canary
        match:
        - uri:
            prefix: /canary
        rewrite:
          uri: /
        route:
        - destination:
            host: demoapp
            subset: v11
        fault:
          abort:               # abort fault
            percentage:
              value: 20        # percentage of requests affected
            httpStatus: 555    # status code returned to the client
      - name: default
        route:
        - destination:
            host: demoapp
            subset: v10
        fault:
          delay:               # delay fault
            percentage:
              value: 20
            fixedDelay: 3s

Retries:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: proxy
    spec:
      hosts:
      - "demoapp.ops.net"
      gateways:
      - istio-system/proxy-gateway
      # - mesh
      http:
      - name: default
        route:
        - destination:
            host: proxy
        timeout: 1s            # overall request timeout
        retries:               # with retries enabled, the observed success rate is higher than the 50% left by fault injection
          attempts: 5          # number of retries
          perTryTimeout: 1s    # timeout for each attempt
          retryOn: 5xx,connect-failure,refused-stream    # retry conditions
    ---
    # demoapp
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: demoapp
    spec:
      hosts:
      - demoapp
      http:
      - name: canary
        match:
        - uri:
            prefix: /canary
        rewrite:
          uri: /
        route:
        - destination:
            host: demoapp
            subset: v11
        fault:
          abort:
            percentage:
              value: 50        # raised to 50%
            httpStatus: 555
      - name: default
        route:
        - destination:
            host: demoapp
            subset: v10
        fault:
          delay:
            percentage:
              value: 50
            fixedDelay: 3s

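Calling from outside: the proxy VirtualService does not list the mesh gateway, so its rules only take effect for requests that come in from outside through the ingress gateway.

    while true; do curl demoapp.ops.net; sleep 0.$RANDOM; done
    while true; do curl demoapp.ops.net/canary; sleep 0.$RANDOM; done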
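The claim that retries lift the success rate above 50% can be checked with a rough back-of-the-envelope estimate. It assumes that each try hits the abort fault independently with probability $p = 0.5$, that `attempts: 5` allows up to five retries after the initial try (six tries in total), that the injected 555 reaches the retrying proxy as a 5xx response, and that the overall `timeout: 1s` and retry back-off do not cut the sequence short. Under those assumptions:

```latex
P(\text{success}) = 1 - p^{\,n+1}
                  = 1 - 0.5^{6}
                  = 1 - 0.015625
                  \approx 98.4\%,
\qquad p = 0.5,\; n = 5 \text{ retries}
```

In practice the measured rate will differ, since the overall timeout bounds how many retries actually fit into one request.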

Traffic mirroring:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: demoapp
    spec:
      hosts:
      - demoapp
      http:
      - name: traffic-mirror
        route:
        - destination:
            host: demoapp
            subset: v10
        mirror:              # the service (and subset) that receives the mirrored traffic
          host: demoapp
          subset: v11
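The mirror rule above copies every request to v11. Mirrored requests are sent on a best-effort basis and their responses are discarded, so only v10 answers the client. To copy just a share of the traffic, the `mirrorPercentage` field can be added next to `mirror`. The following is a sketch in which the value 50 is an illustrative assumption.

```yaml
# Sketch: mirror only part of the traffic to v11.
# mirrorPercentage.value of 50.0 is an assumed, illustrative value.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: traffic-mirror
    route:
    - destination:
        host: demoapp
        subset: v10        # still serves all client responses
    mirror:
      host: demoapp
      subset: v11          # receives copies of the requests
    mirrorPercentage:
      value: 50.0          # roughly half of the requests are mirrored
```

The access logs of the v11 Pods are the easiest place to confirm that mirrored requests arrive.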

demoapp examples

Example 1: how to debug and track down problems.

demoapp version 1.0:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: demoapp
      name: demoappv10
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: demoapp
          version: v1.0
      template:
        metadata:
          labels:
            app: demoapp
            version: v1.0
        spec:
          containers:
          - image: ikubernetes/demoapp:v1.0
            name: demoapp
            env:
            - name: PORT
              value: "8080"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: demoapp
      name: demoappv10
    spec:
      ports:
      - name: 8080-8080
        port: 8080
        protocol: TCP
        targetPort: 8080
      selector:
        app: demoapp
      type: ClusterIP

Check proxy-status:

    ]# istioctl proxy-status
    NAME                                                   CDS      LDS      EDS      RDS        ISTIOD                     VERSION
    demoappv10-5c497c6f7c-76gs7.default                    SYNCED   SYNCED   SYNCED   SYNCED     istiod-765596f7ff-vk6b5    1.12.2
    demoappv10-5c497c6f7c-k6vpw.default                    SYNCED   SYNCED   SYNCED   SYNCED     istiod-765596f7ff-vk6b5    1.12.2
    demoappv10-5c497c6f7c-w25b2.default                    SYNCED   SYNCED   SYNCED   SYNCED     istiod-765596f7ff-vk6b5    1.12.2
    istio-egressgateway-c9cbbd99f-7c6px.istio-system       SYNCED   SYNCED   SYNCED   NOT SENT   istiod-765596f7ff-vk6b5    1.12.2
    istio-ingressgateway-7c8bc47b49-2xcpt.istio-system     SYNCED   SYNCED   SYNCED   SYNCED     istiod-765596f7ff-vk6b5    1.12.2

After deploying, inspect the listeners, routes, clusters and endpoints:

    ]# istioctl proxy-config listeners demoappv10-5c497c6f7c-76gs7
    ]# istioctl proxy-config routes demoappv10-5c497c6f7c-76gs7
    ]# istioctl proxy-config clusters demoappv10-5c497c6f7c-76gs7
    ]# istioctl proxy-config endpoints demoappv10-5c497c6f7c-76gs7

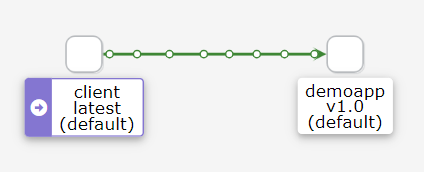

Access the demoapp service from inside the mesh:

    ]# kubectl run client --image=ikubernetes/admin-box -it --rm --restart=Never --command -- /bin/sh
    root@client # curl demoappv10:8080
    iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-76gs7, ServerIP: 10.244.3.207!
    # inspect the sidecar's configuration
    root@client # curl localhost:15000/listeners
    root@client # curl localhost:15000/clusters
    # call the demoappv10 service from inside the mesh
    root@client # while true; do curl demoappv10:8080; sleep 0.$RANDOM; done

The result looks like this:

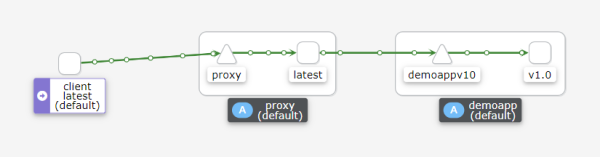

Deploy the proxy service, which calls the demoapp service:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: proxy
      name: proxy
    spec:
      progressDeadlineSeconds: 600
      replicas: 1
      selector:
        matchLabels:
          app: proxy
      template:
        metadata:
          labels:
            app: proxy
        spec:
          containers:
          - image: ikubernetes/proxy:v0.1.1
            imagePullPolicy: IfNotPresent
            name: proxy
            env:
            - name: PROXYURL
              value: http://demoappv10:8080
            ports:
            - containerPort: 8080
              name: web
              protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: proxy
      name: proxy
    spec:
      ports:
      - name: http-80
        port: 80
        protocol: TCP
        targetPort: 8080
      selector:
        app: proxy
      type: ClusterIP

Call the proxy service from a client inside the cluster:

    ]# kubectl run client --image=ikubernetes/admin-box -it --rm --restart=Never --command -- /bin/sh
    root@client # while true; do curl proxy; sleep 0.$RANDOM; done

The result looks like this:

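Example 2: an internal service calls another internal service, with traffic balanced across demoapp10 and demoapp11.

This example is independent of the one above.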

demoapp10:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: demoapp
      name: demoappv10
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: demoapp
          version: v1.0
      template:
        metadata:
          labels:
            app: demoapp
            version: v1.0
        spec:
          containers:
          - image: ikubernetes/demoapp:v1.0
            name: demoapp
            env:
            - name: PORT
              value: "8080"

demoapp11:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: demoapp
      name: demoappv11
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: demoapp
          version: v1.1
      template:
        metadata:
          labels:
            app: demoapp
            version: v1.1
        spec:
          containers:
          - image: ikubernetes/demoapp:v1.0
            name: demoapp
            env:
            - name: PORT
              value: "8080"

demoapp-svc:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: demoapp
      name: demoapp
    spec:
      ports:
      - name: 8080-8080
        port: 8080
        protocol: TCP
        targetPort: 8080
      selector:
        app: demoapp

proxy:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: proxy
      name: proxy
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: proxy
      template:
        metadata:
          labels:
            app: proxy
        spec:
          containers:
          - image: ikubernetes/proxy:v0.1.1
            imagePullPolicy: IfNotPresent
            name: proxy
            env:
            - name: PROXYURL
              value: http://demoapp:8080
            ports:
            - containerPort: 8080
              name: web
              protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: proxy
      name: proxy
    spec:
      ports:
      - name: http-80
        port: 80
        protocol: TCP
        targetPort: 8080
      selector:
        app: proxy
      type: ClusterIP

Call the demoapp service from inside the cluster:

    ]# kubectl run client --image=ikubernetes/admin-box -it --rm --restart=Never --command -- /bin/sh
    root@client # while true; do curl demoapp:8080; sleep 0.$RANDOM; done

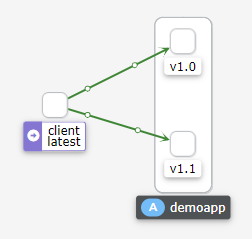

Result of calling demoapp:

Calling proxy from a client inside the cluster:

    root@client # while true; do curl proxy; sleep 0.$RANDOM; done
    Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv11-d69f8f748-tq442, ServerIP: 10.244.3.210! - Took 13 milliseconds.

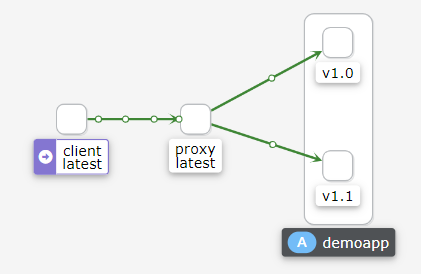

Result of calling proxy:

Routing to different Services: each Service fronts its own group of Pods, so choosing a Service chooses the Pods.

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: demoapp
    spec:
      hosts:
      - demoapp
      http:
      - name: canary           # this rule routes to demoappv11
        match:
        - uri:
            prefix: /canary
        rewrite:
          uri: /
        route:
        - destination:
            host: demoappv11
      - name: default          # this rule routes to demoappv10
        route:
        - destination:
            host: demoappv10

Routing to different Deployments by Deployment (dm) label:

A label selector picks the different groups of Pods, so there is no need to define a separate Service for each group.

VirtualService (vs) rule:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: demoapp
    spec:
      hosts:
      - demoapp
      http:
      - name: canary
        match:
        - uri:
            prefix: /canary
        rewrite:
          uri: /
        route:
        - destination:
            host: demoapp
            subset: v11        # the subset is selected here
      - name: default
        route:
        - destination:
            host: demoapp
            subset: v10

DestinationRule (dr) rule:

    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: demoapp
    spec:
      host: demoapp
      subsets:                 # the subsets are defined here
      - name: v10
        labels:
          version: v1.0        # each subset selects a different label; these match the Pod labels set in the Deployment's template
      - name: v11
        labels:
          version: v1.1

Deployment labeled version: v1.1:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: demoapp
      name: demoappv11
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: demoapp
          version: v1.1
      template:
        metadata:
          labels:
            app: demoapp
            version: v1.1
        spec:
          containers:
          - image: ikubernetes/demoapp:v1.0
            name: demoapp
            env:
            - name: PORT
              value: "8080"

Deployment labeled version: v1.0:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: demoapp
      name: demoappv10
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: demoapp
          version: v1.0
      template:
        metadata:
          labels:
            app: demoapp
            version: v1.0
        spec:
          containers:
          - image: ikubernetes/demoapp:v1.0
            name: demoapp
            env:
            - name: PORT
              value: "8080"

Both version: v1.1 and version: v1.0 nevertheless belong to the same demoapp Service, which shows up as one cluster per subset:

    ]# istioctl proxy-config clusters demoappv11-d69f8f748-tlwfs | grep demoapp
    demoapp.default.svc.cluster.local    8080    -      outbound    EDS    demoapp.default
    demoapp.default.svc.cluster.local    8080    v10    outbound    EDS    demoapp.default
    demoapp.default.svc.cluster.local    8080    v11    outbound    EDS    demoapp.default

Exposing the service externally:

Gateway:

    apiVersion: networking.istio.io/v1beta1
    kind: Gateway
    metadata:
      name: proxy-gateway
      namespace: istio-system    # same namespace as the ingressgateway Pod
    spec:
      selector:
        app: istio-ingressgateway
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "demoapp.ops.net"

VirtualService:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: proxy
    spec:
      hosts:
      - "demoapp.ops.net"
      gateways:
      - istio-system/proxy-gateway
      - mesh    # with mesh, in-cluster clients can also reach the service as demoapp.ops.net; without it they call the Service name directly
      http:
      - name: default
        route:
        - destination:
            host: proxy