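Prometheus Operator: Installation, Usage, and Data Persistence

Deploying Prometheus Operator

Note: With Prometheus Operator, configuration is not done by editing the native Prometheus configuration files or ConfigMap resources. It is done through CRD resources instead. For example, to configure an alert, run kubectl edit prometheusrules.monitoring.coreos.com -n monitoring.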

    kubectl get crd
    NAME                                        CREATED AT
    alertmanagerconfigs.monitoring.coreos.com   2021-01-07T09:43:11Z
    alertmanagers.monitoring.coreos.com         2021-01-07T09:43:11Z
    podmonitors.monitoring.coreos.com           2021-01-07T09:43:12Z
    probes.monitoring.coreos.com                2021-01-07T09:43:12Z
    prometheuses.monitoring.coreos.com          2021-01-07T09:43:12Z
    prometheusrules.monitoring.coreos.com       2021-01-07T09:43:12Z
    servicemonitors.monitoring.coreos.com       2021-01-07T09:43:13Z
    thanosrulers.monitoring.coreos.com          2021-01-07T09:43:13Z

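servicemonitors: configure which metrics are scraped, following the chain servicemonitors -> service -> endpoints; comparable to prometheus.yml.

prometheusrules: define alerting rules; comparable to rule.yml.

alertmanagers: control the Alertmanager application, for example its replica count.

prometheuses: control the Prometheus application, for example its replica count.

Official documentation: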

https://github.com/coreos/prometheus-operator/blob/master/Documentation/api.md

Resource files:

https://github.com/cnych/kubernetes-learning

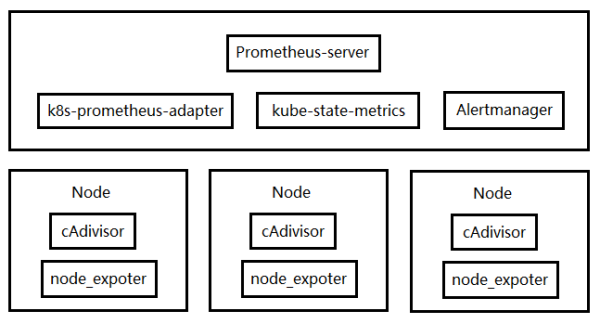

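Prometheus components:

kube-state-metrics: aggregates cluster state into metrics, for example the number of Pods and the number of Pods in each state.

node_exporter: collects node-level data.

Alertmanager: the alerting component.

Prometheus-Server: scrapes data from metrics endpoints, cAdvisor, and exporters.

k8s-prometheus-adapter: the custom metrics component.

GitHub repository: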

https://github.com/coreos/kube-prometheus

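Download the code:

git clone https://github.com/coreos/kube-prometheus.git

First deploy the resource files in the following directory. They create the CRD resources and must be applied before everything else.

    cd kube-prometheus/manifests/setup
    kubectl apply -f .

Then deploy the resources in the parent directory, kube-prometheus/manifests:

    cd ..
    kubectl apply -f .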

Resource list:

    $ kubectl get pods -n monitoring
    NAME                                   READY   STATUS    RESTARTS   AGE
    alertmanager-main-0                    2/2     Running   4          24h
    alertmanager-main-1                    2/2     Running   4          24h
    alertmanager-main-2                    2/2     Running   4          24h
    grafana-697c9fc764-tzbvz               1/1     Running   2          24h
    kube-state-metrics-5b667f584-2xqd2     1/1     Running   2          24h
    node-exporter-6nb4s                    2/2     Running   4          24h
    prometheus-adapter-68698bc948-szvjv    1/1     Running   2          24h
    prometheus-k8s-0                       3/3     Running   1          87m
    prometheus-k8s-1                       3/3     Running   1          87m
    prometheus-operator-7457c79db5-lw2qb   1/1     Running   2          24h

Simple way to access the services:

After deployment you need to reach Prometheus and Grafana. The simplest approach is to change the prometheus-k8s and grafana Services to type NodePort.

    kubectl edit svc prometheus-k8s -n monitoring
    kubectl edit svc grafana -n monitoring
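A non-interactive alternative to kubectl edit is kubectl patch. The following Python sketch only builds and prints the command lines; it does not contact a cluster. It assumes the default patch type is acceptable and that the node port is assigned by the cluster. The helper name nodeport_patch_command is hypothetical.

```python
import json
import shlex


def nodeport_patch_command(service: str, namespace: str = "monitoring") -> str:
    """Return a kubectl command that switches a Service to type NodePort."""
    patch = json.dumps({"spec": {"type": "NodePort"}})
    return (
        f"kubectl patch svc {shlex.quote(service)} "
        f"-n {shlex.quote(namespace)} -p {shlex.quote(patch)}"
    )


if __name__ == "__main__":
    # The two Services named in the text above.
    for name in ("prometheus-k8s", "grafana"):
        print(nodeport_patch_command(name))
```

The printed commands can be reviewed and then run by hand; kubectl get svc -n monitoring shows the assigned node port afterwards.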

Access through an Ingress controller. The Ingress rule is as follows:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: prometheus-k8s
      namespace: monitoring
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
      - host: prometheus2.chexiangsit.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: prometheus-k8s
                port:
                  number: 9090

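Adjusting the number of replicas:

Changing the Pod count by editing the corresponding workload resources directly does not work, because prometheus-operator controls those resources through custom resources (CRDs).

To change the number of Alertmanager Pods, modify the following resource file:

kube-prometheus/manifests/alertmanager-alertmanager.yaml

To change the number of Prometheus replicas or other parameters, modify:

kube-prometheus/manifests/prometheus-prometheus.yaml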

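Adding targets that are not monitored by default:

On the Prometheus targets page, kube-controller-manager and kube-scheduler are not up. The following changes are needed.

http://192.168.1.71:31674/targets

Create the resource files: Endpoints and the corresponding Service resources.

Note that kube-scheduler and kube-controller-manager must listen on --address=0.0.0.0.

Resource relationship: servicemonitor -> service -> endpoints. See the ServiceMonitor resource for the concrete configuration, and make sure the request scheme (http/https), the selected labels, and similar settings match.

ServiceMonitor resource: it already exists by default and does not need to be created, but it must be modified.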

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: kube-scheduler
      namespace: monitoring
    spec:
      endpoints:
      - interval: 30s
        port: http-metrics
        scheme: http  # request scheme; the default is https, clusters installed from binaries need http
        tlsConfig:
          insecureSkipVerify: true
      jobLabel: app.kubernetes.io/name
      namespaceSelector:
        matchNames:
        - kube-system
      selector:
        matchLabels:
          app.kubernetes.io/name: kube-scheduler  # label used to select the Service; the default differs between prometheus operator versions

kube-controller-manager resources:

    apiVersion: v1
    kind: Service
    metadata:
      name: kube-controller-manager
      namespace: kube-system
      labels:
        k8s-app: kube-controller-manager
        # app.kubernetes.io/name: kube-controller-manager
    spec:
      type: ClusterIP
      clusterIP: None
      ports:
      - name: http-metrics
        port: 10252
        protocol: TCP
        targetPort: 10252
    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      name: kube-controller-manager
      namespace: kube-system
      labels:
        k8s-app: kube-controller-manager
        # app.kubernetes.io/name: kube-controller-manager
    subsets:
    - addresses:
      - ip: 192.168.1.70
      ports:
      - name: http-metrics
        port: 10252
        protocol: TCP

Service and Endpoints resources for kube-scheduler:

    apiVersion: v1
    kind: Service
    metadata:
      name: kube-scheduler
      namespace: kube-system
      labels:
        k8s-app: kube-scheduler
        # app.kubernetes.io/name: kube-scheduler  # label used by newer versions; check with: kubectl edit servicemonitor -n monitoring kube-scheduler
    spec:
      type: ClusterIP
      clusterIP: None
      ports:
      - name: http-metrics
        port: 10251
        targetPort: 10251
        protocol: TCP
    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: kube-scheduler
        # app.kubernetes.io/name: kube-scheduler
      name: kube-scheduler
      namespace: kube-system
    subsets:
    - addresses:
      - ip: 192.168.1.70
      ports:
      - name: http-metrics
        port: 10251
        protocol: TCP

Reference:

https://www.servicemesher.com/blog/prometheus-operator-manual/

Basic configuration for monitoring a service

Prometheus monitors services through the chain ServiceMonitor -> Service -> Endpoints. This works for applications inside the k8s cluster as well as for external ones.

ServiceMonitor resource:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        k8s-app: kube-scheduler
      name: kube-scheduler
      namespace: monitoring
    spec:
      endpoints:
      - interval: 30s
        port: http-metrics
      jobLabel: k8s-app
      namespaceSelector:
        matchNames:
        - kube-system
      selector:
        matchLabels:
          k8s-app: kube-scheduler

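Notes on the key fields:

spec.endpoints.interval: 30s means the target is scraped every 30 seconds.

spec.endpoints.port: http-metrics is the name of the Service port, defined in spec.ports.name of the Service resource.

spec.namespaceSelector.matchNames: matches Services in the listed namespaces; to match across all namespaces use any: true.

spec.selector.matchLabels: matches Service labels; multiple labels are combined with AND.

spec.selector.matchExpressions: matches Service labels with set-based operators (In, NotIn, Exists, DoesNotExist). Multiple expressions are also combined with AND; an OR over several values of one key is expressed with the In operator.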
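The selection rules for spec.selector can be modeled in a few lines. This is a simplified Python sketch of Kubernetes label-selector semantics, not code taken from the operator; the function name selector_matches is hypothetical. It treats every matchLabels entry and every matchExpressions requirement as a condition that must hold.

```python
from typing import Dict, List


def selector_matches(selector: Dict, labels: Dict[str, str]) -> bool:
    """Return True if `labels` satisfy a Kubernetes-style label selector."""
    # matchLabels: every key/value pair must be present on the object.
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    # matchExpressions: every requirement must hold as well.
    for expr in selector.get("matchExpressions", []):
        key, op = expr["key"], expr["operator"]
        values: List[str] = expr.get("values", [])
        if op == "In" and labels.get(key) not in values:
            return False
        if op == "NotIn" and labels.get(key) in values:
            return False
        if op == "Exists" and key not in labels:
            return False
        if op == "DoesNotExist" and key in labels:
            return False
    return True


if __name__ == "__main__":
    service_labels = {"k8s-app": "kube-scheduler"}
    print(selector_matches({"matchLabels": {"k8s-app": "kube-scheduler"}}, service_labels))
    print(selector_matches({"matchLabels": {"app.kubernetes.io/name": "kube-scheduler"}}, service_labels))
```

The second call illustrates the version mismatch mentioned earlier: a ServiceMonitor that selects app.kubernetes.io/name does not pick up a Service that only carries k8s-app.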

Service resource:

    apiVersion: v1
    kind: Service
    metadata:
      name: kube-scheduler
      namespace: kube-system
      labels:
        k8s-app: kube-scheduler
    spec:
      selector:
        component: kube-scheduler  # omit this block on clusters installed from binaries
      type: ClusterIP
      clusterIP: None
      ports:
      - name: http-metrics
        port: 10251
        targetPort: 10251
        protocol: TCP

metadata.labels: the labels here must match the ones selected by spec.selector.matchLabels in the ServiceMonitor.

spec.selector: omit this block on a k8s cluster installed from binaries; on a kubeadm cluster it selects the kube-scheduler Pods by label.

spec.clusterIP: set to None.

Endpoints resource:

    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: kube-scheduler
      name: kube-scheduler
      namespace: kube-system
    subsets:
    - addresses:
      - ip: 192.168.1.70
      ports:
      - name: http-metrics
        port: 10251
        protocol: TCP

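The metadata of the Endpoints (name, namespace, labels) must be consistent with the metadata of the Service.

subsets.addresses.ip: for a highly available cluster, list the IPs of all kube-scheduler nodes here.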
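For several node IPs, the Service and Endpoints pair can be generated instead of written by hand. The Python sketch below builds both objects with identical metadata and prints them as a JSON List, which kubectl apply -f accepts. The helper name and the second IP address are assumptions for illustration only.

```python
import json
from typing import Dict, List


def headless_service_and_endpoints(name: str, namespace: str, port: int,
                                   ips: List[str]) -> List[Dict]:
    """Build a selector-less headless Service and a matching Endpoints object."""
    # Shared metadata keeps name, namespace and labels consistent.
    meta = {"name": name, "namespace": namespace, "labels": {"k8s-app": name}}
    service = {
        "apiVersion": "v1", "kind": "Service", "metadata": meta,
        "spec": {"type": "ClusterIP", "clusterIP": "None",
                 "ports": [{"name": "http-metrics", "port": port,
                            "targetPort": port, "protocol": "TCP"}]},
    }
    endpoints = {
        "apiVersion": "v1", "kind": "Endpoints", "metadata": meta,
        "subsets": [{"addresses": [{"ip": ip} for ip in ips],
                     "ports": [{"name": "http-metrics", "port": port,
                                "protocol": "TCP"}]}],
    }
    return [service, endpoints]


if __name__ == "__main__":
    # 192.168.1.72 is a made-up second scheduler node.
    items = headless_service_and_endpoints(
        "kube-scheduler", "kube-system", 10251, ["192.168.1.70", "192.168.1.72"])
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```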

Adding custom labels: the targets page shows many labels, and new ones can be added through relabeling.

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      generation: 4
      labels:
        app.kubernetes.io/component: exporter
        app.kubernetes.io/name: node-exporter
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 1.0.1
      name: node-exporter
      namespace: monitoring
    spec:
      endpoints:
      - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
        interval: 15s
        port: https
        relabelings:
        - action: replace
          regex: (.*)
          replacement: $1
          sourceLabels:
          - __meta_kubernetes_pod_node_name
          targetLabel: instance
        - action: replace
          replacement: yuenan  # value of the new label
          sourceLabels:
          - __meta_kubernetes_pod_uid  # source label that is rewritten
          targetLabel: region  # name of the new label
        scheme: https
        tlsConfig:
          insecureSkipVerify: true
      jobLabel: app.kubernetes.io/name
      selector:
        matchLabels:
          app.kubernetes.io/component: exporter
          app.kubernetes.io/name: node-exporter
          app.kubernetes.io/part-of: kube-prometheus
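What the two replace steps above do to a target's labels can be traced with a small model. This Python sketch is a simplification of Prometheus relabeling, not its implementation: it covers only the replace action, and the sample label values (node-1, abc-123) are made up.

```python
import re
from typing import Dict, List


def relabel_replace(labels: Dict[str, str], source_labels: List[str],
                    target_label: str, regex: str = "(.*)",
                    replacement: str = "$1", separator: str = ";") -> Dict[str, str]:
    """Apply one `replace` relabeling step and return the new label set."""
    # Source label values are joined with the separator; missing labels count as "".
    joined = separator.join(labels.get(name, "") for name in source_labels)
    # The regex is anchored at both ends, hence fullmatch.
    match = re.fullmatch(regex, joined)
    if match is None:
        return dict(labels)  # no match: labels stay unchanged
    # Translate $1-style references into Python's \g<1> form before expanding.
    template = re.sub(r"\$(\d+)", r"\\g<\1>", replacement)
    result = dict(labels)
    result[target_label] = match.expand(template)
    return result


if __name__ == "__main__":
    target = {"__meta_kubernetes_pod_node_name": "node-1",
              "__meta_kubernetes_pod_uid": "abc-123"}
    step1 = relabel_replace(target, ["__meta_kubernetes_pod_node_name"], "instance")
    step2 = relabel_replace(step1, ["__meta_kubernetes_pod_uid"], "region",
                            replacement="yuenan")
    print(step2["instance"], step2["region"])
```

Because the second step uses a fixed replacement with the default regex, every target of this ServiceMonitor receives the same region value regardless of the pod UID.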

Example: monitoring external nodes from prometheus-operator.

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        k8s-app: external-nodes
      name: external-nodes
      namespace: monitoring
    spec:
      endpoints:
      - interval: 30s
        port: http-metrics
      jobLabel: k8s-app
      namespaceSelector:
        matchNames:
        - monitoring
      selector:
        matchLabels:
          k8s-app: external-nodes
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: external-nodes
      namespace: monitoring
      labels:
        k8s-app: external-nodes
    spec:
      # no spec.selector: the targets are external, so the Endpoints below are maintained by hand
      type: ClusterIP
      clusterIP: None
      ports:
      - name: http-metrics
        port: 9100
        targetPort: 9100
        protocol: TCP
    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: external-nodes
      name: external-nodes
      namespace: monitoring
    subsets:
    - addresses:
      - ip: 172.31.33.194
        nodeName: vietnam-prd
      - ip: 172.31.34.184
        nodeName: vietnam-uat
      ports:
      - name: http-metrics
        port: 9100
        protocol: TCP

Modifying the alerting configuration

Removing Watchdog: open the rules for editing, search for the Watchdog rule, and delete it.

kubectl edit prometheusrules.monitoring.coreos.com -n monitoring prometheus-k8s-rules

Monitoring etcd

Create the Secret resource:

kubectl -n monitoring create secret generic etcd-certs --from-file=etcd_client.key --from-file=etcd_client.crt --from-file=ca.crt

Edit the Prometheus resource to add the secret. This prometheus resource is a custom resource defined by a CRD.

kubectl edit prometheus k8s -n monitoring

Add the following secret information:

    spec:
      secrets:
      - etcd-certs

Check that it has been mounted:

kubectl exec -it -n monitoring prometheus-k8s-0 -- ls /etc/prometheus/secrets/etcd-certs

Create the Service and Endpoints resources. The metadata of the two resources must be consistent, and clusterIP is None.

    apiVersion: v1
    kind: Service
    metadata:
      name: etcd-k8s
      namespace: kube-system
      labels:
        k8s-app: etcd
    spec:
      type: ClusterIP
      clusterIP: None
      ports:
      - name: port
        port: 2379
        protocol: TCP
    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      name: etcd-k8s
      namespace: kube-system
      labels:
        k8s-app: etcd
    subsets:
    - addresses:
      - ip: 192.168.1.70
        nodeName: etc-master  # any name
      ports:
      - name: port
        port: 2379
        protocol: TCP

Example: for the individual parameters, see the official documentation linked near the top of this page.

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        k8s-etcd: etcd
      name: etcd
      namespace: kube-system
    spec:
      endpoints:
      - interval: 30s
        port: etcd-metrics  # same name as service.spec.ports.name below
        scheme: https
        tlsConfig:
          # the file names must match the keys stored in the etcd-certs Secret
          caFile: /etc/prometheus/secrets/etcd-certs/ca.crt
          certFile: /etc/prometheus/secrets/etcd-certs/healthcheck-client.crt
          keyFile: /etc/prometheus/secrets/etcd-certs/healthcheck-client.key
          insecureSkipVerify: true
      jobLabel: k8s-app
      namespaceSelector:
        matchNames:
        - kube-system
      selector:
        matchLabels:
          k8s-app: etcd
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: etcd-k8s
      namespace: kube-system
      labels:
        k8s-app: etcd
    spec:
      selector:
        component: etcd  # selects the Pods by label; omit for an external application
      type: ClusterIP
      clusterIP: None
      ports:
      - name: etcd-metrics
        port: 2379
        protocol: TCP
    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      name: etcd-k8s  # the Endpoints name must equal the Service name
      namespace: kube-system
      labels:
        k8s-app: etcd
    subsets:
    - addresses:
      - ip: 10.65.104.112  # list of Pod IPs; the same applies to non-Pod applications
        nodeName: lf-k8s-112
      ports:
      - name: etcd-metrics  # usually the same as the Service port name
        port: 2379
        protocol: TCP

Open the Prometheus targets page to see the etcd targets. If there are errors, check whether the etcd listen address is reachable.

http://192.168.1.71:31674/targets

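Then download an etcd dashboard template from the Grafana website:

Home -> grafana -> Dashboards -> on the left, Filter by -> Data Source: Prometheus -> Search within this list: etcd. The template list then appears on the right. Select Etcd by Prometheus, which has ID 3070, download the JSON file, and import it: "+" on the left -> Import -> Upload .json file.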

https://grafana.com/grafana/dashboards

Adding custom alerts

Create the PrometheusRule resource:

Prometheus is associated with Alertmanager through configuration that matches the Alertmanager endpoints. To add a custom alert, create a PrometheusRule resource that carries the two labels prometheus=k8s and role=alert-rules, because Prometheus selects rule objects by these two labels.

    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      name: etcd-rules
      namespace: monitoring
      labels:
        prometheus: k8s
        role: alert-rules
    spec:
      groups:
      - name: etcd
        rules:
        - alert: EtcdClusterUnavailable
          annotations:
            summary: etcd cluster small
          expr: |
            count(up{job="etcd"} == 0) > (count(up{job="etcd"}) / 2 - 1 )
          for: 3m
          labels:
            severity: critical

Check that the PrometheusRule has been mounted under the Prometheus rules directory:

kubectl exec -n monitoring -it prometheus-k8s-0 -- ls /etc/prometheus/rules/prometheus-k8s-rulefiles-0/

To verify that it took effect, open the Alerts page of the Prometheus web UI and check whether the new EtcdClusterUnavailable alert is listed.

http://192.168.1.71:31674/alerts
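The threshold in the EtcdClusterUnavailable expression is easier to judge with concrete numbers. The Python sketch below mirrors the arithmetic of the expression for a single evaluation; it is an illustration, not PromQL, and it ignores the for: 3m hold time. The function name is hypothetical.

```python
def etcd_alert_fires(total_members: int, down_members: int) -> bool:
    """Mirror count(up == 0) > (count(up) / 2 - 1) for one evaluation."""
    if down_members == 0:
        # count() over an empty vector yields no sample, so nothing can fire.
        return False
    return down_members > (total_members / 2 - 1)


if __name__ == "__main__":
    for total, down in [(3, 0), (3, 1), (5, 1), (5, 2)]:
        print(total, down, etcd_alert_fires(total, down))
```

With three members the threshold is 0.5, so a single member being down already satisfies the condition; with five members it takes two.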

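Configuring Alertmanager notifications

The other resources stay as shipped in the official coreos configuration. The main change is the Secret: delete the existing one and use the Secret below.

Email notifications:

alertmanager-secret.yaml: once this is configured correctly, alert messages arrive in the corresponding mailbox.

    apiVersion: v1
    data: {}
    kind: Secret
    metadata:
      name: alertmanager-main
      namespace: monitoring
    stringData:
      alertmanager.yaml: |-
        global:
          smtp_smarthost: "smtp.163.com:465"
          smtp_from: "chuxiangyi_com@163.com"
          smtp_auth_username: "chuxiangyi_com@163.com"
          smtp_auth_password: "password"
          smtp_require_tls: false
        route:
          group_by: ["alertname","cluster"]  # alert grouping: alerts with identical values for these labels are merged into one group
          group_wait: 30s  # alerts of the same group received within this window are sent as one notification
          group_interval: 5m  # interval between notifications for the same group
          repeat_interval: 10m  # interval before a notification is repeated
          receiver: "default-receiver"  # pick any of the receivers below
        receivers:
        - name: "default-receiver"
          email_configs:  # email notifications
          - to: "myEmail@qq.com"
        - name: 'web.hook'
          webhook_configs:  # send alerts through a webhook
          - url: 'http://127.0.0.1:5001/'
    type: Opaque

To check that the configuration has been loaded, change the alertmanager Service to type NodePort and open the status page: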

http://192.168.1.71:30808/#/status

Besides email, WeChat, DingTalk, and other notification channels can be configured.

Using an external Alertmanager:

Companies usually have more than one environment, and several environments can share one Alertmanager. This requires configuring an external Alertmanager.

View the Service resource:

    $ kubectl get svc -n monitoring alertmanager-main -o yaml
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
      labels:
        alertmanager: main
        app.kubernetes.io/component: alert-router
        app.kubernetes.io/name: alertmanager
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: v0.21.0
    spec:
      clusterIP: 10.107.202.102
      clusterIPs:
      - 10.107.202.102
      ports:
      - name: web
        port: 9093
        protocol: TCP
        targetPort: web
      selector:
        alertmanager: main
        app: alertmanager
        app.kubernetes.io/component: alert-router
        app.kubernetes.io/name: alertmanager
        app.kubernetes.io/part-of: kube-prometheus
      sessionAffinity: ClientIP
      sessionAffinityConfig:
        clientIP:
          timeoutSeconds: 10800
      type: ClusterIP
    status:
      loadBalancer: {}

Edit the Endpoints resource. As long as the Service keeps its selector, Kubernetes regenerates the Endpoints from the matching Pods, so the selector has to be removed from the Service for a hand-edited Endpoints object to persist.

    $ kubectl edit endpoints -n monitoring alertmanager-main
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:  # the labels must match those of the Service resource
        alertmanager: main
        app.kubernetes.io/component: alert-router
        app.kubernetes.io/name: alertmanager
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: v0.21.0
      name: alertmanager-main
      namespace: monitoring
    subsets:
    - addresses:
      - ip: 10.32.215.16  # address of the external Alertmanager
      ports:
      - name: web  # the name must match the Service port name
        port: 9093
        protocol: TCP

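DingTalk notifications:

YAML files for deploying dingtalk:

https://github.com/zhuqiyang/dingtalk-yaml

For prometheus operator, the notification address is configured in the resource file alertmanager-secret.yaml.

Alert routing:

Child routes are matched first; if no child route matches, the default receiver is used. Alerts are matched by the labels defined on them.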

    apiVersion: v1
    data: {}
    kind: Secret
    metadata:
      name: alertmanager-main
      namespace: monitoring
    stringData:
      alertmanager.yaml: |-
        global:
          smtp_smarthost: "smtp.163.com:465"
          smtp_from: "chuxiangyi_com@163.com"
          smtp_auth_username: "chuxiangyi_com@163.com"
          smtp_auth_password: "123456"
          smtp_require_tls: false
        route:
          group_by: ["alertname"]
          group_wait: 30s
          group_interval: 5m
          repeat_interval: 10m
          receiver: "web.hook"
          routes:
          - match_re:  # regex match: alerts whose alertname matches the regex use the web.hook receiver
              alertname: "^(KubeCPUOvercommit|KubeMemOvercommit)$"
            receiver: "web.hook"
          - match:  # alerts whose alertname equals this value use the ops-email receiver
              alertname: "kube-scheduler-Unavailable"
            receiver: "ops-email"
        receivers:
        - name: "ops-email"
          email_configs:
          - to: "250994559@qq.com"  # separate multiple addresses with commas
        - name: "web.hook"
          webhook_configs:
          - url: "http://dingtalk.default.svc.cluster.local:5001"
    type: Opaque

Configuring alert rules:

The alert rules themselves are defined in the Prometheus configuration. With a Prometheus Operator deployment they can be modified in the prometheus-rules.yaml resource file.
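How the routing tree in the Secret above picks a receiver can be traced with a small model. This Python sketch is a simplification and not Alertmanager's implementation: it handles one level of child routes only and ignores options such as continue and grouping. The function names are hypothetical; the route data is copied from the configuration above.

```python
import re
from typing import Dict

ROUTE = {
    "receiver": "web.hook",
    "routes": [
        {"match_re": {"alertname": "^(KubeCPUOvercommit|KubeMemOvercommit)$"},
         "receiver": "web.hook"},
        {"match": {"alertname": "kube-scheduler-Unavailable"},
         "receiver": "ops-email"},
    ],
}


def child_matches(route: Dict, labels: Dict[str, str]) -> bool:
    """A child route matches when all of its match and match_re entries hold."""
    for name, value in route.get("match", {}).items():
        if labels.get(name, "") != value:
            return False
    for name, pattern in route.get("match_re", {}).items():
        if re.fullmatch(pattern, labels.get(name, "")) is None:
            return False
    return True


def pick_receiver(route: Dict, labels: Dict[str, str]) -> str:
    """Return the receiver of the first matching child, else the default."""
    for child in route.get("routes", []):
        if child_matches(child, labels):
            return child["receiver"]
    return route["receiver"]


if __name__ == "__main__":
    for alertname in ("KubeCPUOvercommit", "kube-scheduler-Unavailable", "Watchdog"):
        print(alertname, "->", pick_receiver(ROUTE, {"alertname": alertname}))
```

An alert such as Watchdog matches neither child route and therefore falls back to the top-level receiver.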

Resource overview dashboards

Grafana dashboards that give an overview of the various k8s resources:

https://grafana.com/dashboards/6417

https://grafana.com/grafana/dashboards/13105

Automatic discovery

Defining a ServiceMonitor for every resource in k8s is tedious; automatic discovery can be used for monitoring instead.

Add the following to the annotations of the resource to be monitored:

annotation: prometheus.io/scrape=true

The following shows how to configure automatic discovery:

Create the file prometheus-additional.yaml:

    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

Create a Secret from the configuration above:

kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring
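Two of the relabeling steps in prometheus-additional.yaml decide whether a target is kept and which address is scraped. The Python sketch below models just those two steps with the same regular expression as the configuration; it is an illustration rather than Prometheus code, and the sample addresses are made up.

```python
import re
from typing import Dict, Optional

# Same pattern as the __address__ rule: host, optional port, ";", annotated port.
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def should_scrape(annotations: Dict[str, str]) -> bool:
    """Model of the `keep` rule: the scrape annotation must be exactly "true"."""
    return annotations.get("prometheus.io/scrape", "") == "true"


def rewrite_address(address: str, port_annotation: Optional[str]) -> str:
    """Return the scrape address after the __address__ relabeling step."""
    joined = f"{address};{port_annotation or ''}"
    match = ADDRESS_RE.fullmatch(joined)
    if match is None:
        return address  # no port annotation: keep the discovered address
    return f"{match.group(1)}:{match.group(2)}"


if __name__ == "__main__":
    annotations = {"prometheus.io/scrape": "true", "prometheus.io/port": "9121"}
    if should_scrape(annotations):
        print(rewrite_address("10.244.1.5:6379", annotations.get("prometheus.io/port")))
```

The example corresponds to a redis Service whose application port is 6379 while the exporter listens on the annotated port 9121.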

Change the file kube-prometheus/manifests/prometheus-prometheus.yaml to the following:

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: monitoring
    spec:
      alerting:
        alertmanagers:
        - name: alertmanager-main
          namespace: monitoring
          port: web
      image: quay.io/prometheus/prometheus:v2.15.2
      nodeSelector:
        kubernetes.io/os: linux
      podMonitorNamespaceSelector: {}
      podMonitorSelector: {}
      replicas: 2
      resources:
        requests:
          memory: 400Mi
      ruleSelector:
        matchLabels:
          prometheus: k8s
          role: alert-rules
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      additionalScrapeConfigs:
        name: additional-configs
        key: prometheus-additional.yaml
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector: {}
      version: v2.15.2

In effect, only the following configuration was added:

    additionalScrapeConfigs:
      name: additional-configs
      key: prometheus-additional.yaml

Apply this configuration:

kubectl apply -f prometheus-prometheus.yaml

Then check under Prometheus -> Status -> Configuration that the configuration above is present.

The configuration is now loaded, but the required permissions are still missing, as the Prometheus log shows:

kubectl logs -f prometheus-k8s-0 -c prometheus -n monitoring

Add the permissions:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus-k8s
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      - services
      - endpoints
      - pods
      - nodes/proxy
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - configmaps
      - nodes/metrics
      verbs:
      - get
    - nonResourceURLs:
      - /metrics
      verbs:
      - get

After a short while, check under Prometheus -> Status -> Targets whether the following target group is listed:

kubernetes-service-endpoints (1/1 up)

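For resources that expose metrics on a specific port, such as redis, add the port as well:

    annotations:
      prometheus.io/scrape: "true"
      prometheus.io/port: "9121"

Other resources such as Pods and Ingresses can be handled in the same way.

Further reading: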

https://github.com/YaoZengzeng/KubernetesResearch/blob/master/Prometheus%E5%91%8A%E8%AD%A6%E6%A8%A1%E5%9E%8B%E5%88%86%E6%9E%90.md

Prometheus Operator data persistence

NFS

Using NFS as persistent storage:

First set up an NFS service on any host, then deploy the following resources in k8s. They use nfs-client-provisioner, whose official documentation is here:

https://github.com/kubernetes-retired/external-storage/tree/master/nfs-client

New location:

https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

Files to deploy:

nfs-storageclass.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
    provisioner: fuseim.pri/ifs  # or choose another name, must match deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "false"

rbac.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
    - apiGroups: [""]
      resources: ["persistentvolumes"]
      verbs: ["get", "list", "watch", "create", "delete"]
    - apiGroups: [""]
      resources: ["persistentvolumeclaims"]
      verbs: ["get", "list", "watch", "update"]
    - apiGroups: ["storage.k8s.io"]
      resources: ["storageclasses"]
      verbs: ["get", "list", "watch"]
    - apiGroups: [""]
      resources: ["events"]
      verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
    - kind: ServiceAccount
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    rules:
    - apiGroups: [""]
      resources: ["endpoints"]
      verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    subjects:
    - kind: ServiceAccount
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

deployment.yaml: remember to adjust the NFS server address and directory.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
          - name: nfs-client-provisioner
            image: quay.io/external_storage/nfs-client-provisioner:latest
            volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
            env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs  # must differ per provisioner when there are several StorageClasses and NFS servers
            - name: NFS_SERVER
              value: 192.168.0.71
            - name: NFS_PATH
              value: /opt/nfs
          volumes:
          - name: nfs-client-root
            nfs:
              server: 192.168.0.71
              path: /opt/nfs

prometheus-prometheus.yaml

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: monitoring
    spec:
      retention: "30d"  # how long data is kept
      alerting:
        alertmanagers:
        - name: alertmanager-main
          namespace: monitoring
          port: web
      storage:  # add the storage section
        volumeClaimTemplate:
          spec:
            storageClassName: managed-nfs-storage  # must match the name of the StorageClass created above
            resources:
              requests:
                storage: 20Gi
      image: quay.io/prometheus/prometheus:v2.15.2
      nodeSelector:
        kubernetes.io/os: linux
      podMonitorNamespaceSelector: {}
      podMonitorSelector: {}
      replicas: 2
      resources:
        requests:
          memory: 400Mi
      ruleSelector:
        matchLabels:
          prometheus: k8s
          role: alert-rules
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      additionalScrapeConfigs:
        name: additional-configs
        key: prometheus-additional.yaml
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector: {}
      version: v2.15.2

When testing with a manually created Pod, the PVCs must be created by hand with the resource file below. To delete a PV, first stop the application from using the storage, then delete the PVC; the PV is deleted automatically once the PVC is gone.

nfs-pvc.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: prometheus-k8s-db-prometheus-k8s-0
      namespace: monitoring
      annotations:
        volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 2Gi
    ---
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: prometheus-k8s-db-prometheus-k8s-1
      namespace: monitoring
      annotations:
        volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 2Gi

Ceph RBD

Using Ceph RBD as persistent storage:

After creating the StorageClass, put its name into the prometheus-prometheus.yaml resource file.

    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-admin-secret
      namespace: monitoring
    data:
      key: QVFBbjVuVmVSZDJrS3hBQUlRZE9xcDkrSlQrVStzQUhIbVMzWGc9PQ==
    type: kubernetes.io/rbd
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-k8s-secret
      namespace: monitoring
    data:
      key: QVFCWEhuWmVSazlkSnhBQVJoenZEeUpnR1hFVDY4dzc0WW9KVmc9PQ==
    type: kubernetes.io/rbd
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: rbd-dynamic
      annotations:
        storageclass.beta.kubernetes.io/is-default-class: "true"
    provisioner: kubernetes.io/rbd
    reclaimPolicy: Retain
    parameters:
      monitors: 192.168.0.34:6789
      adminId: admin
      adminSecretName: ceph-admin-secret
      adminSecretNamespace: monitoring
      pool: kube
      userId: k8s
      userSecretName: ceph-k8s-secret
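Values under data in a Secret are base64-encoded, so the Ceph key has to be encoded before it goes into the key field. The Python sketch below shows the round trip with the standard library; the sample key string is a made-up placeholder, not a real Ceph key, and the helper names are hypothetical.

```python
import base64


def encode_secret_value(plain: str) -> str:
    """Base64-encode a value for the `data` section of a Secret."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Decode a value taken from the `data` section of a Secret."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    placeholder_key = "AQBplaceholderKeyForIllustrationOnly=="  # hypothetical
    encoded = encode_secret_value(placeholder_key)
    print(encoded)
    print(decode_secret_value(encoded) == placeholder_key)
```

Since Ceph keys are themselves base64 text, the value stored in the Secret ends up encoded twice; decoding the Secret value once yields the key as Ceph reports it.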

Retention period: to set how many days of monitoring data are kept, modify the resource file prometheus-prometheus.yaml.

    spec:
      retention: "30d"  # [0-9]+(ms|s|m|h|d|w|y) (milliseconds seconds minutes hours days weeks years)
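The retention format quoted in the comment above can be checked before the manifest is applied. This Python sketch validates a value against that pattern and converts it to seconds; the unit lengths for weeks and years (7 and 365 days) are an assumption about how such durations are usually interpreted, and the function names are hypothetical.

```python
import re

RETENTION_RE = re.compile(r"([0-9]+)(ms|s|m|h|d|w|y)")

# Seconds per unit; w and y are assumed to be 7 and 365 days.
UNIT_SECONDS = {"ms": 0.001, "s": 1, "m": 60, "h": 3600,
                "d": 86400, "w": 7 * 86400, "y": 365 * 86400}


def is_valid_retention(value: str) -> bool:
    """Return True if the whole string matches [0-9]+(ms|s|m|h|d|w|y)."""
    return RETENTION_RE.fullmatch(value) is not None


def retention_seconds(value: str) -> float:
    """Convert a valid retention string to seconds, or raise ValueError."""
    match = RETENTION_RE.fullmatch(value)
    if match is None:
        raise ValueError(f"invalid retention value: {value!r}")
    return int(match.group(1)) * UNIT_SECONDS[match.group(2)]


if __name__ == "__main__":
    for candidate in ("30d", "12h", "30 days"):
        print(candidate, is_valid_retention(candidate))
```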

CephFS

Using CephFS as persistent storage:

Example location:

https://github.com/kubernetes-incubator/external-storage/tree/master/ceph/cephfs/deploy

storageclass.yaml

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: cephfs
    provisioner: ceph.com/cephfs
    parameters:
      monitors: 192.168.0.34:6789
      adminId: admin
      adminSecretName: ceph-admin-secret
      adminSecretNamespace: cephfs
      claimRoot: /pvc-volumes
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-admin-secret
      namespace: cephfs
    data:
      key: QVFBbjVuVmVSZDJrS3hBQUlRZE9xcDkrSlQrVStzQUhIbVMzWGc9PQ==
    type: kubernetes.io/rbd

deployment.yaml

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: cephfs-provisioner
      namespace: cephfs
    rules:
    - apiGroups: [""]
      resources: ["persistentvolumes"]
      verbs: ["get", "list", "watch", "create", "delete"]
    - apiGroups: [""]
      resources: ["persistentvolumeclaims"]
      verbs: ["get", "list", "watch", "update"]
    - apiGroups: ["storage.k8s.io"]
      resources: ["storageclasses"]
      verbs: ["get", "list", "watch"]
    - apiGroups: [""]
      resources: ["events"]
      verbs: ["create", "update", "patch"]
    - apiGroups: [""]
      resources: ["services"]
      resourceNames: ["kube-dns","coredns"]
      verbs: ["list", "get"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: cephfs-provisioner
    subjects:
    - kind: ServiceAccount
      name: cephfs-provisioner
      namespace: cephfs
    roleRef:
      kind: ClusterRole
      name: cephfs-provisioner
      apiGroup: rbac.authorization.k8s.io
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: cephfs-provisioner
      namespace: cephfs
    rules:
    - apiGroups: [""]
      resources: ["secrets"]
      verbs: ["create", "get", "delete"]
    - apiGroups: [""]
      resources: ["endpoints"]
      verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: cephfs-provisioner
      namespace: cephfs
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: cephfs-provisioner
    subjects:
    - kind: ServiceAccount
      name: cephfs-provisioner
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: cephfs-provisioner
      namespace: cephfs
    ---
    apiVersion: apps/v1  # extensions/v1beta1 is no longer served by clusters that offer networking.k8s.io/v1 Ingress
    kind: Deployment
    metadata:
      name: cephfs-provisioner
      namespace: cephfs
    spec:
      replicas: 1
      selector:  # required by apps/v1
        matchLabels:
          app: cephfs-provisioner
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: cephfs-provisioner
        spec:
          containers:
          - name: cephfs-provisioner
            image: "quay.io/external_storage/cephfs-provisioner:latest"
            env:
            - name: PROVISIONER_NAME
              value: ceph.com/cephfs
            - name: PROVISIONER_SECRET_NAMESPACE
              value: cephfs
            command:
            - "/usr/local/bin/cephfs-provisioner"
            args:
            - "-id=cephfs-provisioner-1"
          serviceAccount: cephfs-provisioner

cephfs-pvc.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: prometheus-k8s-db-prometheus-k8s-0
      namespace: monitoring
    spec:
      storageClassName: cephfs
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 2Gi
    ---
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: prometheus-k8s-db-prometheus-k8s-1
      namespace: monitoring
    spec:
      storageClassName: cephfs
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 2Gi

Note: when CephFS is used as storage and prometheus-k8s-x goes into CrashLoopBackOff, the cause may be a permission problem that prevents Prometheus from writing to the file system. Adjusting runAsUser and the related options in prometheus-prometheus.yaml resolves it.

    securityContext:
      fsGroup: 0
      runAsNonRoot: false
      runAsUser: 0

Example of the storage section:

    storage:
      volumeClaimTemplate:
        spec:
          storageClassName: cephfs
          accessModes: [ "ReadWriteMany" ]
          resources:
            requests:
              storage: 2Gi

Prometheus Operator: monitoring an external application (MySQL)

mysqld_exporter official documentation:

https://github.com/prometheus/mysqld_exporter

Download the exporter:

wget https://github.com/prometheus/mysqld_exporter/releases/download/v0.12.1/mysqld_exporter-0.12.1.linux-amd64.tar.gz

Create the exporter user and set its password:

    mysql -uroot -p
    CREATE USER 'exporter'@'localhost' IDENTIFIED BY '123456';
    # grant permission to view replication status, threads, and all databases
    GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'exporter'@'localhost';

Install the exporter on the MySQL node:

    tar -xf mysqld_exporter-0.12.1.linux-amd64.tar.gz -C /usr/local/
    cd /usr/local
    ln -sv mysqld_exporter-0.12.1.linux-amd64/ mysqld_exporter

Create the configuration file:

    vim .my.cnf
    [client]
    user=exporter
    password=123456

Some other options:

    # commonly used flags
    # collect InnoDB metrics
    --collect.info_schema.innodb_cmp
    # InnoDB storage engine status
    --collect.engine_innodb_status
    # path to the configuration file
    --config.my-cnf=".my.cnf"

Test run:

./mysqld_exporter --config.my-cnf=.my.cnf

Create the systemd unit file (named mysql_exporter.service so that it matches the commands below):

    [Unit]
    Description=https://prometheus.io
    After=network.target
    After=mysqld.service

    [Service]
    ExecStart=/usr/local/mysqld_exporter/mysqld_exporter --config.my-cnf=/usr/local/mysqld_exporter/.my.cnf
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

Start the service:

    systemctl daemon-reload
    systemctl start mysql_exporter.service
    systemctl status mysql_exporter.service

View the metrics target:

http://10.65.104.112:31880/targets

In k8s:

Write the resource file:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        k8s-app: mysql
      name: mysql
      namespace: monitoring
    spec:
      endpoints:
      - interval: 30s
        port: http-metrics
      jobLabel: mysql
      namespaceSelector:
        matchNames:
        - kube-system
      selector:
        matchLabels:
          k8s-app: mysql
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql
      namespace: kube-system
      labels:
        k8s-app: mysql
    spec:
      type: ClusterIP
      clusterIP: None
      ports:
      - name: http-metrics
        port: 9104
        targetPort: 9104
        protocol: TCP
    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: mysql
      name: mysql
      namespace: kube-system
    subsets:
    - addresses:
      - ip: 10.3.149.85
      ports:
      - name: http-metrics
        port: 9104
        protocol: TCP

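Apply the resources to the k8s cluster:

kubectl apply -f mysql-export.yaml

Open the following page in Prometheus. MySQL should now appear in the target list; if it does not, wait a moment.

http://10.65.104.112:31880/targets

Add the following dashboard in Grafana; afterwards the MySQL Overview dashboard page is available.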

https://grafana.com/grafana/dashboards/7362

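If the Prometheus server is a standalone service that does not run inside k8s, add the following to its configuration file:

    scrape_configs:
      # add a job and name it
      - job_name: 'mysql'
        # add nodes statically
        static_configs:
          # monitored targets
          - targets: ['10.3.149.85:9104']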